Revelations of AI: The Materialist Perspective

Background

On the very first day ChatGPT went online🌐, I rushed home from work to try it out and ended up chatting into the small hours. The earliest version wasn’t exactly a genius, but it already hinted at the future of Large Language Models (LLMs), and that night was a whirlwind of surprises🤩. I was so excited that I hardly slept💤 at all.

In the time that followed, I kept exploring related topics. One question kept nagging at me: “🤔Why?” Why could a structure as simple as the transformer exhibit “intelligence”?

I tried to put together a framework for understanding AI, hoping to clear up some of my confusion.

Here are my thoughts (strictly from a materialist perspective):

- 🧠 The human brain is actually quite simple

- 💡 The intelligence understood by humans has always been at a rather low level

- 🤖 The training principles of AI can be used to understand the learning processes of the human brain

- 🔧 The training principles of AI can be used to enhance overall human capabilities

🧠 The Simplicity of the Human Brain

If you believe that humans evolved through natural selection, you will see that our organs are just “lucky” survivors. Is the brain🧠 an anomaly compared to the other organs? If the other organs are straightforward in structure and function, why is the brain considered exceptionally “complex”?

The complex part of the brain is consciousness. If you are a materialist, consciousness is merely a result of brain activity, not a real entity. By focusing on the material aspects, you can see that the physical structure of the brain, like other organs, is also quite simple.

💡 Are Humans Really That Smart?

We often consider ourselves the smartest beings on Earth, but in reality, we tend to solve problems only within known methods, ignoring new possibilities. We chase short-term gains, overlook long-term impacts, and often overestimate our intelligence, confusing understanding with mastery. Despite significant human achievements, we still need to humbly acknowledge our intellectual limits and use this understanding as a basis for learning and improvement.

This reminds me of my childhood observations of ants🐜:

As a child, I was always curious about the ants swarming at construction sites. For the ants, these sites changed far too quickly and their “homes” were frequently destroyed, yet they kept repairing them instead of looking for a more suitable place to live.

As an adult, I realized that in many ways, humans are not much smarter than ants. Faced with recurring natural disasters🌋, we still cling to earthquake zones, floodplains🌊, and deserts, repeating a cycle of destruction and rebuilding.

In the natural world, countless animals build nests and migrate. Are the bizarre inventions of humans really more advanced than birds building nests or bees constructing hives? If extraterrestrial intelligences ever visit Earth, they might just say, “Oh, there are various bugs here. Some can fly; others can’t, but try to fly using strange contraptions, and they don’t even fly well.”

Understanding the “Human Brain”🧠 from AI Principles🤖

The core of endowing machines with “intelligence” today is neural networks. Setting aside the details, let’s try to understand what a neural network is in simple terms.

🧑‍🍳 It’s a Chef Who Takes Feedback

Consider a neural network as a chef:

- You provide tomatoes and eggs and ask him to make scrambled eggs with tomatoes.

- After tasting it, you find it’s not to your liking and tell him how you expect it to taste.

- Then, he adjusts his cooking method (perhaps using less oil or salt).

- After making a hundred thousand dishes, through continuous feedback, you train a chef with excellent culinary skills.

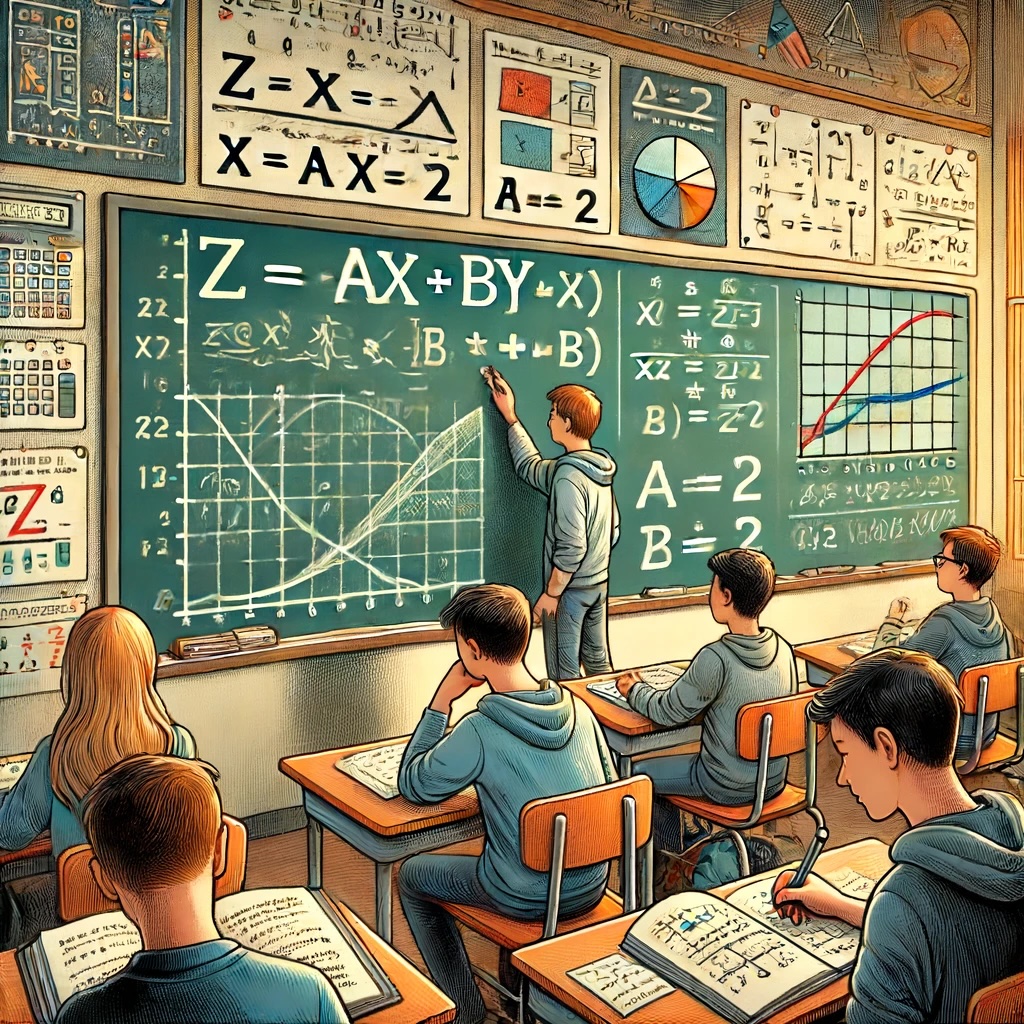

📚 It’s a Familiar Equation

Remember the middle school equation $z = ax + by$?

- $x$ and $y$ are the inputs, $z$ is the output, and $a$ and $b$ are the parameters.

- Set $a=1, b=2$, plug in a pair of $x$ and $y$ values, and you can calculate a $z$ value.

- If that $z$ deviates from the expected value, adjust $a$ and $b$ to bring $z$ closer to the correct answer.

- After many rounds of $x$, $y$ inputs and $z$ corrections, $a$ and $b$ stabilize, giving you a formula that solves the problem.
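
To make this adjust-and-correct loop concrete, here is a minimal Python sketch. It is only an illustration under my own assumptions: the “correct” values $a=3$ and $b=-1$, the learning rate, the number of rounds, and the function name `train` are all made up for this example. The update rule is plain gradient descent on the squared error, which is one common way of doing the adjusting, not the only one.

```python
import random


def train(samples, lr=0.1, epochs=50):
    """Fit z = a*x + b*y by nudging a and b after every sample."""
    a, b = 1.0, 2.0  # starting guess, same as in the text
    for _ in range(epochs):
        for x, y, z_expected in samples:
            z = a * x + b * y        # the formula's current answer
            error = z - z_expected   # how far off it is
            # Move each parameter a little in the direction that
            # shrinks the error (gradient of the squared error).
            a -= lr * error * x
            b -= lr * error * y
    return a, b


# Hypothetical "correct answers": generated from a = 3, b = -1.
rng = random.Random(0)
true_a, true_b = 3.0, -1.0
samples = []
for _ in range(100):
    x = rng.uniform(-1, 1)
    y = rng.uniform(-1, 1)
    samples.append((x, y, true_a * x + true_b * y))

a, b = train(samples)
print(a, b)  # should land very close to 3.0 and -1.0
```

The chef works the same way: the dish is $z$, your feedback is the error, and the amount of oil and salt are $a$ and $b$.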

🤖=🧑‍🍳 ChatGPT Is Also a Chef

In the previous two examples, the final trained chef and problem-solving formula are known as “models” in the AI field. We can train various models to solve different problems, such as identifying objects in photos, classifying images, or predicting the next part of a text.

Now, let’s talk about ChatGPT; simply put, it’s just a formula for guessing the next word.

To elaborate a bit more:

- It has a huge number of parameters, reportedly on the order of hundreds of billions.

- It uses a very creative attention mechanism.

- It is trained by hiding part of a complete article, letting the model guess the hidden part, and then correcting it with the right answer.

Combine these with a few more interesting little innovations of the same kind, and you can train a model that chats like a “human”.
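
As a toy illustration of “guessing the next word”, the sketch below simply counts which word follows which in a tiny made-up corpus, then guesses the most frequent follower. To be clear, this is a bigram count table, not a transformer: it has no attention mechanism and no trained parameters, and the corpus and the function names `train_next_word` and `guess_next` are hypothetical. It only shows the hide-and-guess idea at the smallest possible scale.

```python
from collections import Counter, defaultdict


def train_next_word(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    # Each word is "hidden" in turn and recorded as the correct
    # answer for the word that precedes it.
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def guess_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training text for the example.
corpus = "the chef cooks eggs . the chef cooks tomatoes . the chef tastes eggs"
model = train_next_word(corpus)

print(guess_next(model, "chef"))  # prints: cooks
```

A real LLM replaces the count table with a neural network, so it can make sensible guesses even for word sequences it has never seen.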

🚀 How to Apply This in Life and Benefit From It

Since ChatGPT launched on GPT-3.5, LLMs have exploded in popularity, with companies and even small teams training their own models and steadily improving their performance.

Here are some common methods to enhance large model capabilities:

- 📈 Increase the number of model parameters.

- 🌟 Improve the quality and diversity of training data.

- 🎨 Multimodal training (combining more types of data, such as images, videos, audio, text, etc.).

Let’s see how these points apply to training ourselves or raising children:

📈 Increasing Model Parameters

To enable large models to run on different devices, open-source projects often provide multiple versions of the same model that differ mainly in the number of parameters. For example, the 70B version of Llama 3 is considerably more capable than the 8B version.

The number of model parameters can be likened to the number of neural connections in the human brain. While genetics plays a significant role, there are ways to strengthen these connections after birth, including:

- 🎓 learning new skills

- 🏋️ engaging in physical and intellectual exercise

- 👥 maintaining social interactions

- 🍎 consuming a nutritious diet

- 💤 getting adequate sleep

- 🧘 effectively managing stress

🌟 Improving the Quality and Diversity of Training Data

For humans, everything we see, hear, and feel daily constitutes training data. If our goal is to enhance brain capabilities, we can focus on the following:

- Quality:

  - 🏛️ Read masterpieces that have stood the test of time instead of frivolous novels.

  - 📚 Systematically study professional textbooks or papers, rather than absorbing scattered bits of knowledge forwarded by relatives.

  - 🎥 Watch highly rated documentaries instead of short videos that only provide fleeting emotional value.

- Diversity:

  - The progress of LLMs suggests that broader knowledge improves a model’s ability to generalize, and with it overall problem-solving ability. The same should hold for us.

  - Learn about various disciplines and different types of knowledge.

  - Try doing things you’ve never done before.

🎨 Multimodal Training

For humans, multimodality includes visual, auditory, tactile, gustatory, and olfactory senses. Using multiple sensory abilities simultaneously constitutes multimodal training.

At a kindergarten orientation for parents, an education expert presented a multiple-choice question. The question asked which activity could best develop a child’s brain: watching TV, reading, listening to music, or eating independently. The expert’s answer was eating independently. This is because, during the eating process, a child’s eyes are watching, hands are moving, nose is smelling, tongue is tasting, and ears are listening. All sensory organs are engaged.

So, a better approach would be:

- 🎣 Go fishing for real, rather than playing a fishing video game.

- 🥾 Go hiking yourself, instead of watching the scenery through a screen.

- 🏰 Walk the paths ancient people walked, rather than relying purely on textual imagination.

📚 Additional: Reading is Still the Most Efficient Method

Although modern technology offers many kinds of media, I believe that reading remains the most efficient way for humans to learn. Consider how long it takes you to read a novel; if all of its content were adapted into a TV series, how many episodes would it take to cover everything?

❓ Misconception: Is Forgotten Knowledge Wasted?

I once believed that reading so many books and memorizing so many poems and songs was all for naught if I couldn’t remember them. However, in my conversations with ChatGPT, I found that it could solve all kinds of problems yet couldn’t accurately recite even a single poem such as “Mulan Ci”. This realization suddenly resolved years of confusion. The learning process had changed the structure of my brain. Even if I can’t remember the exact words, or even the gist, of those books, the changes they left in my brain still influence my behavior and help me.

🎯 Conclusion

In this rapidly developing era, we can learn a lot from AI. Understanding AI is not just about keeping up with technological trends but also about better understanding ourselves, uncovering our potential, and enhancing our lives.

The ancient maxim “Know thyself,” inscribed at the Temple of Apollo at Delphi, has guided generations, and today, my understanding of myself has deepened slightly.

💡 As AI technology continues to develop and spread into everyday life, people will start to ponder deeper questions, such as:

- Will AGI have consciousness like humans?

- If AGI behaves exactly like a human, is it really human?

- Should AGI have human rights?

- Given our insignificance, how do we maintain optimism?

💡 This article reflects purely materialist thinking. I’ll try to supplement it with other perspectives in the future. While reading, you might also have noticed some questions worth discussing, such as:

- What is consciousness?

- What is the relationship between consciousness and matter?

I will try to explore these issues in another article.